A recent update to 3ie’s Impact Evaluation Repository shows that, as of September 2015, it contained 4,260 records of published development-related impact evaluations worldwide. Only 18 of these focus on PNG and Pacific island countries, less than half of one percent of the database. Why is this number so low? Should we be concerned?

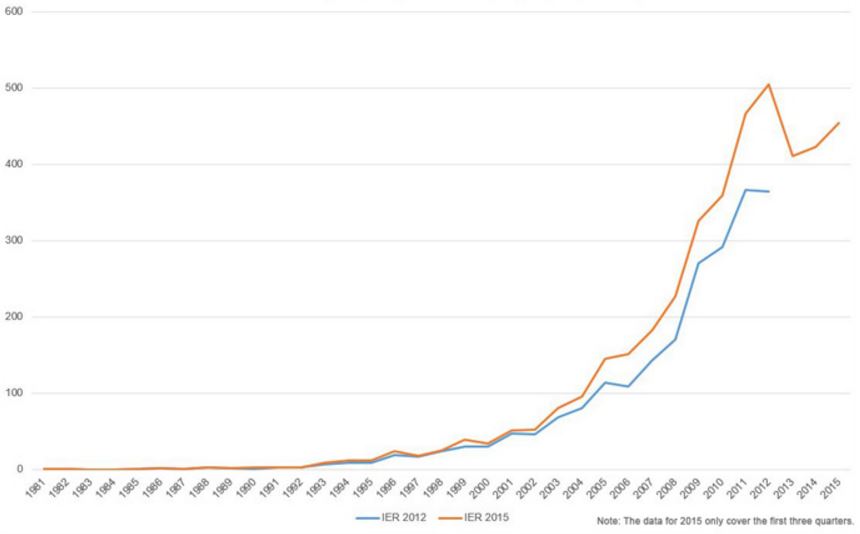

The total number of published impact evaluations focused on development issues has rocketed up over the last 15 years (see chart below), reflecting an increased emphasis on development research into what works, what doesn’t, and why (see here [pdf] for a primer on impact evaluations and experimental approaches to evaluation).

Number of development impact evaluations published each year (1981-2015)

Source: Miranda, Sabet, and Brown 2016

The main criterion for including impact evaluations in the 3ie repository is that they must identify and quantify causal effects on outcomes of interest (see here [pdf] for 3ie’s repository screening criteria). It is important to note that most operational evaluations undertaken as part of donor-based development programs cannot meet 3ie’s repository criteria. This often reflects a focus on program inputs or outputs rather than outcomes; a lack of outcome data collected both before and after the life of the program; and an inability to make appropriate comparisons of outcomes between those who received treatment from the program and those who did not.

Before trying to answer the questions posed above, it is useful to look at the 18 PNG and Pacific impact evaluations in more detail (you can download a summary of them here [pdf]).

Several of the papers share the same authors, which suggests that there are significant hurdles to researchers focused on PNG and the Pacific becoming involved in impact evaluations. The papers also concentrate on health, labour and migration (the last two falling under the “Social Protection” sector), which partly reflects the concentration of authors and their tendency to focus on similar issues over time. It is also possible that these particular sectors are more amenable to impact evaluation.

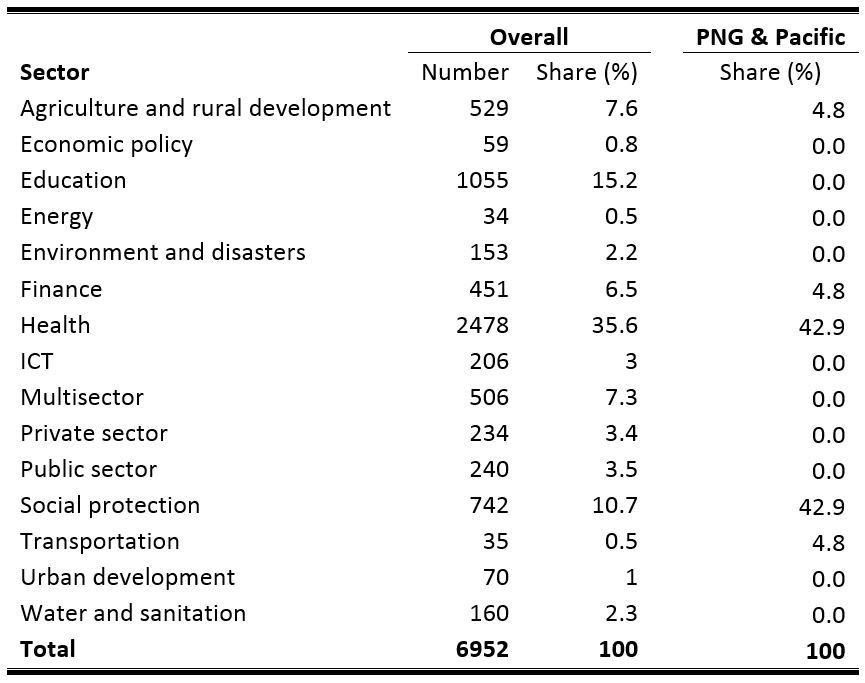

To see whether there are major gaps in the sectors covered by the 18 impact evaluations focused on PNG and the Pacific, it is helpful to look at the distribution of sectors across the entire repository (see Table 1 below; note that one paper can cover several sectors). There do indeed appear to be major gaps. For example, education is the second most frequent category in the repository, accounting for 15% of all sector classifications, yet none of the 18 impact evaluations is education focused. There is also only one paper focused on PNG, by far the most populous country in the region.

Table 1: Topic distribution of papers in 3ie’s Impact Evaluation Repository

I’ve focused on three reasons why there are so few impact evaluations focused on PNG and the Pacific.

First, it seems that there is little demand for impact evaluations in PNG and the Pacific, or that development policies and programs in the region are not usually implemented in ways that allow an impact evaluation to take place. For example, at Devpolicy’s recent forum on aid evaluations by the Office of Development Effectiveness (ODE), we heard that a major finding in ODE’s report on investing in teachers [pdf] was that there was

“…almost no data on outcomes that could be attributed to DFAT’s teacher development investments. In most cases it was impossible to judge whether teacher development had led to improved teaching practices or improved learning outcomes for pupils.”

Second, even if an impact evaluation in PNG and the Pacific were to take place, the findings may not be seen as having much value. For example, an education research proposal I was involved in, which focused on a number of Pacific islands, was rejected partly because of issues to do with external validity: even if we were able to identify important policy impacts, there would be no guarantee that the findings would be applicable or scalable in other contexts around the world. It seems, at least in this case, that the Pacific islands are just not important enough when it comes to justifying funding for impact evaluations.

Third, impact evaluations can be very costly. This is a particular problem in PNG and the Pacific because the region’s population is highly dispersed, which means that research costs are higher than in most other parts of the world. At the same time, the region’s relatively small population means that the overall amount of funding available for research is also small.

Are 18 impact evaluations in PNG and the Pacific too few? It could be argued that most development policies, particularly those funded by international donors, are based on rigorous evidence of impact drawn from other parts of the developing world, and hence there is little need for impact evaluations of programs in PNG and the Pacific. However, I believe this number is too low, because rigorously demonstrating the effectiveness of foreign aid in our immediate region is important for the sustainability of that aid (see here [pdf] for Australia). Furthermore, the political, geographic and economic challenges in PNG and the Pacific often mean that what works elsewhere in the world cannot be relied on to inform development policy in PNG and the Pacific.

How can more impact evaluations be encouraged? Greater recognition of the importance of measuring causal effects on outcomes would help. This would need to come in large part from international donors.

A small number of aid investments in PNG and the Pacific should incorporate a strategy, at the concept and design phase, to collect outcomes-based data (before and after implementation) and to include an appropriate comparison group so that impact on outcomes can be inferred. One way to encourage this would be for ODE to have greater involvement in concept and design across Australian aid investments, since collecting this type of data would substantially improve the quality of the operational evaluations that ODE supports.
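To illustrate why both before-and-after data and a comparison group matter, here is a minimal sketch of a difference-in-differences calculation, one common way (among several) of inferring impact from such data. The function name `did_estimate` and all the test scores are hypothetical, invented purely for illustration, and the estimate is only valid under the assumption that both groups would have followed the same trend without the program.

```python
from statistics import mean


def did_estimate(treat_before, treat_after, comp_before, comp_after):
    """Hypothetical helper: difference-in-differences estimate of impact.

    The change in the treated group's mean outcome minus the change in the
    comparison group's mean outcome. Assumes both groups would have moved
    in parallel had the program not taken place.
    """
    treated_change = mean(treat_after) - mean(treat_before)
    comparison_change = mean(comp_after) - mean(comp_before)
    return treated_change - comparison_change


# Illustrative (made-up) pupil test scores, not real data.
treat_before = [48, 50, 52]
treat_after = [56, 58, 60]
comp_before = [49, 50, 51]
comp_after = [52, 53, 54]

# A before/after comparison alone attributes the whole change to the program.
naive = mean(treat_after) - mean(treat_before)
did = did_estimate(treat_before, treat_after, comp_before, comp_after)

print(f"Before/after change in treated group: {naive}")
print(f"Difference-in-differences estimate: {did}")
```

With these made-up numbers, the treated group's scores rise by 8 points, but the comparison group's scores also rise by 3, so only 5 points are attributed to the program. Without outcome data on a comparison group, the before/after figure would overstate the impact.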

Related to this, ODE should better recognise that the quality of operational evaluations suffers when appropriate outcomes-based data are not available. For example, ODE’s recent review of operational evaluations [pdf] of Australia’s aid investments completed in 2014 found that 77% had adequate (or better) credibility of evidence. However, the lack of impact evaluations in PNG and the Pacific seems to indicate, more generally, that credible evidence is not being generated.

Beyond international donors, it is clear that developing country governments in the region need to play a greater role in facilitating impact evaluations. While these governments cannot be forced to do this, it is important that the international community highlight the benefits of finding out whether policies are having an impact, and devote resources and technical capacity to collecting outcomes-based data that can improve the evidence base for policy decisions in PNG and the Pacific.

Impact evaluations should be used as a key measure of performance in development. They cannot be replaced by in-house, subjective measures of aid quality, nor should they be an afterthought in the standard monitoring and evaluation process. If we’re serious about improving the effectiveness of aid to PNG and the Pacific, then impact evaluations cannot be overlooked.

Anthony Swan is a Research Fellow at the Development Policy Centre.

The table doesn’t seem to capture any of ACIAR’s impact evaluations – is there a reason for that? Are they not considered to be methodologically sound? There are quite a few for the Pacific – see here

At least one paper in that ACIAR series uses the term “impact evaluation” in a way that does not seem consistent with 3ie’s criteria; other papers in the series are “impact assessments”, which also do not seem consistent with 3ie’s criteria. From limited sampling, it is likely that there is an issue with the methodology from a 3ie perspective, although I’m not saying there is anything wrong with the methodology given the objectives of the ACIAR papers. Alternatively, the ACIAR series may simply not meet 3ie’s criteria on publication.

It is not for me to tell 3ie how to categorize things, but as an author of several of those 18 papers I wouldn’t consider many of them to be “impact evaluations”. By my definition, perhaps only the financial literacy study, which had explicit investigator-driven randomization, would qualify.

We would hope that all econometric studies aiming to uncover causal effects use good research designs that are not (too) prone to omitted variable bias (aka selection effects). Thus, I would think that the work we did on transport access and poverty in PNG using an IV strategy would qualify, despite 3ie omitting it. When randomization is available, as with the migration lotteries, it is good to use it. But it is hardly the silver bullet that too many development people think it is. “What works” should more properly be called “what worked, in that particular context, when implemented by those particular people”, because absent a theory of why it worked there is no guarantee that it will work elsewhere. For example, Rozelle et al. at Stanford REAP have new results on deworming that show no effect on school performance in rural Western China, contrary to the much-publicized effects in Kenya.

Your reminder that these impact evaluations at best tell us “what worked” rather than “what works” is a good one. We should be looking to draw evidence from many evaluations rather than a very small number. That is why 18 (plus or minus a few) for PNG and the Pacific isn’t going to tell us very much.

For those interested, here is a discussion paper on impact evaluations from AusAID / ODE.

The paper is from 2012 and shows that greater use of impact evaluations was on the radar. Perhaps the embers of the Impact Evaluation Working Group are still warm?