From June to August of this year, we quizzed the people who partner to deliver the $5 billion Australian aid program. 356 non-government and contractor executives and staff, government and multilateral organization officials, academics and consultants participated in our survey. And now the results are in. You can find the full report, the executive summary, a one-page summary, and an op-ed here. Or listen to the podcast of last week’s launch.

No similar attempt to survey Australia’s aid stakeholders had been undertaken, and we had no idea how ours would go. We were delighted to get 356 respondents, and with the richness of the results.

This exercise has certainly had its critics, so before we get to the results, let’s run through some of the objections commonly made to it.

One comment that has been made is that we shouldn’t trust the results based on a self-selected sample. In fact, the survey was run in two phases, the first of which was not self-selected, but based on a sampling frame for Australian development NGOs and development contractor executives. For these two important groups, in addition to a sampling frame we had a good response rate, and thus very representative results are possible from the 105 responses we obtained in this phase. For other groups involved with Australia’s aid program (multilateral agency officials, Australian and developing country government staff, academics and consultants) we were unable to draw up a sampling frame, and so did indeed rely on self-selection. These results from the 251 who participated in the second phase are less reliable, but still of great interest. One of the striking features of the survey is how many issues the different stakeholder groups agree on. This makes us think those who self-selected were not the disgruntled few, but the silent majority.

Second, some people have labelled the survey as one of “vested interests,” the implication being that we should not be interested in or cannot trust results obtained from a survey of people who are positively predisposed to, and perhaps obtaining a living from, the aid program. Indeed, ours was deliberately a survey of those actively involved in the aid program (80% of respondents), and knowledgeable about it (100% self-assessed their knowledge of the aid program as average or better; 80% said they had strong or very strong knowledge). That was the whole point. We wanted to quiz the people the Australian government entrusts with our aid dollars. We should be interested in what they have to say.

Third, we have sometimes been asked: wouldn’t it have been better to survey the beneficiaries of Australian aid (the overseas poor) or the funders (the Australian taxpayer)? Of course, such efforts are useful and important. There have been several public opinion polls about Australian aid, and some attempts to poll Australian aid beneficiaries (e.g. the RAMSI People’s Surveys). While there is no doubt that there could and should be more such efforts, we should also accept that aid is a complex and difficult-to-observe business and that aid insiders are far and away the best informed about aid effectiveness. This survey seeks to give the aid insider a voice.

A fourth criticism is that we should not take seriously the results of a perceptions survey. Just because most people think that something is so does not mean that it is. True, but perceptions surveys are widely used to benchmark public services (through citizens’ report cards), and to assess multilateral aid agencies (through the MOPAN initiative). In many cases, there is no substitute for expert judgement. That is why external aid reviews, such as the 2011 Independent Review of Aid Effectiveness, rely heavily on submissions and hearings. Our survey systematizes that process, and puts it in a format which can be carried out more frequently. Given the difficulties of assessing aid effectiveness any other way, asking the experts is certainly worth trying.

Given this thinking, you won’t be surprised to learn that we did indeed have a strong focus on aid effectiveness in our survey. We got very mixed results on this front. We can divide this news into the good and the bad. In this post, the first in a series in which we’ll present you with the results, we bring you the good news.

We had five general questions relating to the effectiveness of the Australian aid program. We asked people how effective they thought the aid program was. We had a whole series of questions relating to respondents’ own engagement with the aid program, and in that series asked them how effective they thought their own activity was. We asked them to compare the effectiveness of the Australian aid program with that of the average OECD donor. We asked them whether they thought Australian aid effectiveness was improving, and what impact the scale-up in aid had made in regard to aid effectiveness.

The figure below summarizes the answers they gave. All these questions were answered using a scale that ranged from very negative, to negative, to neutral, to positive, to very positive. The columns show the proportion of participants that responded with each option. We can also assign each response a score from very negative (1) to very positive (5). Averaging across all respondents gives us an average or overall score, where 5 is the maximum, 1 the minimum and 3 a bare pass. These scores are shown by the line graph in the figure below. Finally, the error bars around each score show the range of scores across the respondent groups: the NGO executives; the development contractor executives; and the self-selected groups, which we divide into academics, Australian government officials, contractors and consultants, multilateral and developing country government officials, and NGOs.
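For readers who want to see the arithmetic, here is a minimal Python sketch of the scoring just described: the share of answers for each option, the overall average on the 1 to 5 scale, and the lowest and highest group averages. The answers and the function name `summarize` are made up for illustration; they are not survey data, and this is not necessarily how the report's figures were produced.

```python
from collections import Counter
from statistics import mean

# Five-point scale as described in the post: very negative (1) to very positive (5).
SCALE = {
    "very negative": 1,
    "negative": 2,
    "neutral": 3,
    "positive": 4,
    "very positive": 5,
}


def summarize(responses_by_group):
    """Summarize one question (hypothetical helper, not from the report).

    responses_by_group maps a group name to a list of answers on SCALE.
    Returns the proportion of all respondents choosing each option, the
    overall average score, and the lowest and highest group averages.
    """
    all_answers = [a for answers in responses_by_group.values() for a in answers]
    counts = Counter(all_answers)
    proportions = {option: counts[option] / len(all_answers) for option in SCALE}
    overall = mean(SCALE[a] for a in all_answers)
    group_means = {
        group: mean(SCALE[a] for a in answers)
        for group, answers in responses_by_group.items()
    }
    return {
        "proportions": proportions,
        "overall": overall,
        "low": min(group_means.values()),
        "high": max(group_means.values()),
    }


# Made-up answers for three of the groups, for illustration only.
example = {
    "NGO executives": ["positive", "positive", "neutral", "very positive"],
    "Contractor executives": ["positive", "neutral", "negative", "positive"],
    "Academics": ["neutral", "positive"],
}

result = summarize(example)
print(result)
```

In this toy example the overall score is pooled across all respondents, so larger groups carry more weight; the low and high values are simple group averages, which is one reasonable reading of "range" here.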

Responses of stakeholder groups across different effectiveness questions

You can see that participants are very positive about their own activity, pretty positive about the aid program as a whole and think that Australian aid is as good as or better than the average OECD donor. These positive responses are not all that surprising given the background of the respondents. But it is encouraging that most aid practitioners and experts think that aid effectiveness is improving, and that the scale-up of aid is having a positive, not a negative, impact on aid effectiveness. You can also see from the error bars that different stakeholder groups hold pretty similar views.

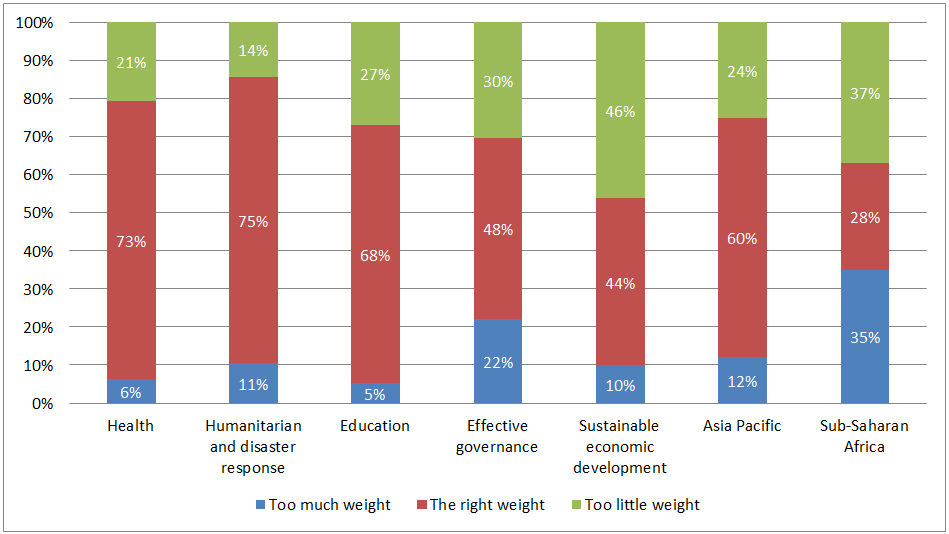

It isn’t just at the general level that stakeholders are positive. They also think that the aid program has its sectoral and geographic priorities about right. We asked respondents whether they thought the right weight (or too much, or too little) was given to different sectoral priorities by the aid program. Most responded that they think we have it right on health, education and disaster response. Views are more divided on governance and, interestingly, on economic development. Stakeholders think the latter is either given the right weight or not enough weight, suggesting some sympathy for the new Government’s plans to give this priority a higher profile. (Remember, the survey was conducted before the election.) We also asked about geographic priorities. Views are very divided about whether we give too much or too little aid to Africa, but, more importantly (given the relatively small size of the Africa program), there is majority agreement that we have the focus right on the Asia-Pacific.

Views on sectoral and geographic priorities

Clearly, there is a lot aid experts and practitioners like about the Australian aid program. They are hardly wildly enthusiastic, and they certainly don’t think our aid is world class, but overall they are positive about the effectiveness of Australian aid. They think that the sectoral and geographic priorities of the program are largely right, and they would be sympathetic to the new Government’s efforts to give more weight to economic development.

That’s the good news. Unfortunately, there is bad to follow. Tune in for our next post tomorrow to find out what it is. Meanwhile, please have a look at our report and send in your comments. We plan to bring out a final version early next year.

A final word. Thank you if you were one of the 356 respondents who took time out from their busy schedule to fill in the survey. We hope you’ll find the results a good return on your investment.

Stephen Howes is Director of the Development Policy Centre. Jonathan Pryke is a Research Officer at the Centre.

This is the first in a series of blog posts on the findings of the 2013 Australian aid stakeholder survey. Find the series here.